Summary of the Google Algorithm Leak

The recent Google leak has provided us with additional information about the Google algorithm.

The 14,000 variables give us an overview of various elements influencing the search rankings. This leak highlights the complexity of the overall algorithm and its multiple layers.

Google patent for web search scoring

Most elements highlighted in the different analyses confirm assumptions made by the SEO community.

Some of these variables are new to us. Other factors we expected to be found are absent from the repository.

The leak mostly reinforces SEO considerations and highlights certain factors that Google previously denied, such as using user data in search engine algorithms.

Adapting SEO strategy in light of this information

For most businesses and marketing teams, this leak shouldn’t change their modern SEO approach of building great user experiences and content rather than chasing the algorithm.

Focusing on driving more qualified traffic to a better user experience will signal to Google that your page deserves to rank.

In short, SEO is now one step closer to UX/CRO than paid search.

- Branding is more important than ever.

- User data means that content, links, and signal decisions should shift from a pure SEO focus to UX, CRO, and user journey optimisation.

- Content usefulness, originality, freshness and effort will influence your rankings and should help users convert. Traffic is nice; revenue is better.

- Topical authority matters both for humans and bots. Make sure your useful content covers the entire user journey.

- Links are still relevant; focus on quality and diversity if you want to continue to rank.

Note: Not the Full Algorithm

The Google Search algorithm has not leaked. The leak relates to an API for Document storage and retrieval in the cloud, not organic search algorithms. However, it shows details about the data Google collects to rank sites.

SEO experts don’t suddenly have all the answers. But the information that did leak is an unprecedented look into the inner workings of Google’s algorithm.

While some of the ranking factors are included, the critical piece missing is the weighting and processing of each factor.

Also, it is not certain that all these items are used in the current algorithm, even if the document appears to be recent.

Unanswered questions:

- How much of this documented data is actively used to rank search results?

- What is the relative weighting and importance of these signals compared to other ranking factors?

- How have Google’s systems and use of this data evolved?

Most Interesting Findings from the Google Algo Leak

UX & User Engagement Metrics

- Click data and Chrome data are in use. We imagine this extends to Android devices as well.

- NavBoost – Opens up several possible tests on user engagement, click-through rate, and measuring potential traffic capture instead of search volume

- Concept of usefulness, not just content quality

- Duration of NavBoost data series (13 months)

- Font size matters for links and content

- User metrics are used in Panda – Test pruning site by cutting pages with poor or no engagement. Our ScalePath.ai platform can help identify pages to fix or remove

- Image clicks are used to evaluate image quality

Content

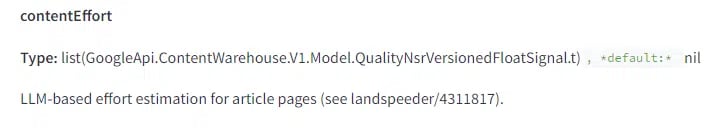

- Content effort score is a variable via pageQuality

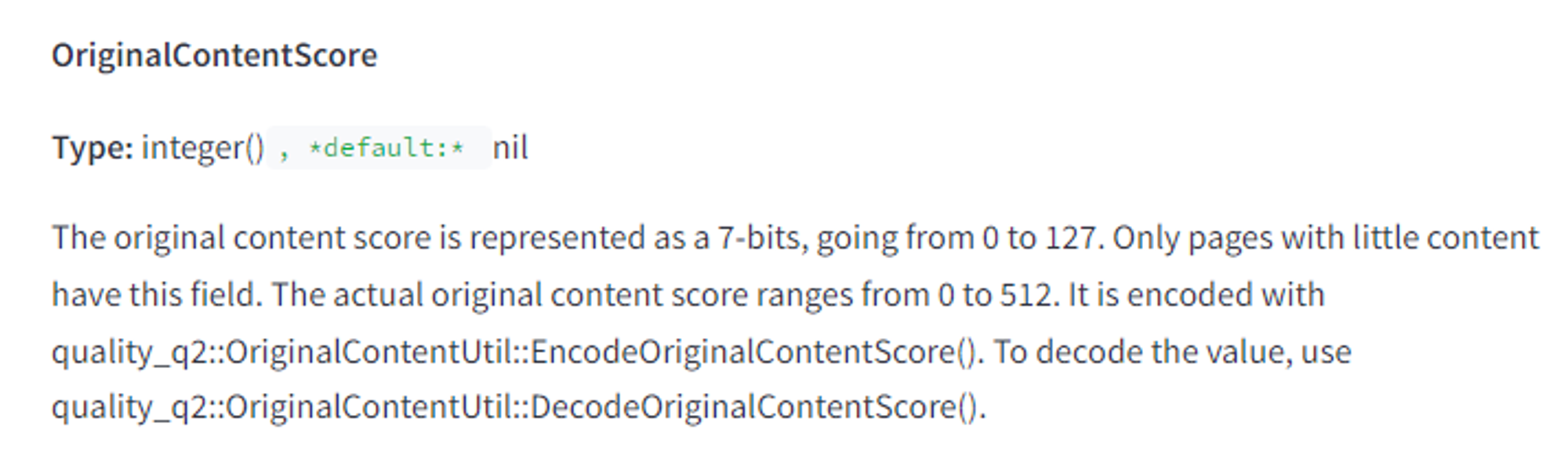

- OriginalContentScore suggests that short content is scored for its originality. Try on category pages.

- Content freshness (“bylineDate”, “syntacticDate”, and “semanticDate”): ensure dates are consistent across all systems (HTML, structured data, XML sitemap)

- Max size for content – numTokens suggest content can get truncated.

- siteFocusScore – how well a site sticks to a single topic.

- siteRadius – Measuring a specific page against topical affinity at site level. i.e. If your site is about credit cards, should you be ranking for “best fridges”?

- Mention of Gold standard documents. Need to dig further.

- isAuthor – treat authors as entities.

Brand awareness, Authority, Signals, Mentions

- Brand searches, clicks, and visits are the signals we should look for.

- Brand-related signals and offline popularity as ranking factors.

Links

- Click data influences how Google weighs links for ranking purposes.

- Domain Authority is a thing.

- OnSiteProminence – Links from new and trusted pages and link freshness.

- Links from higher up in the page seem to have an influence.

Other

- There is strong conjecture that Google uses the last 20 versions of a page stored to evaluate changes for links.

- Demotion – a good way to run a quick laundry list to ensure nothing is dragging performance down.

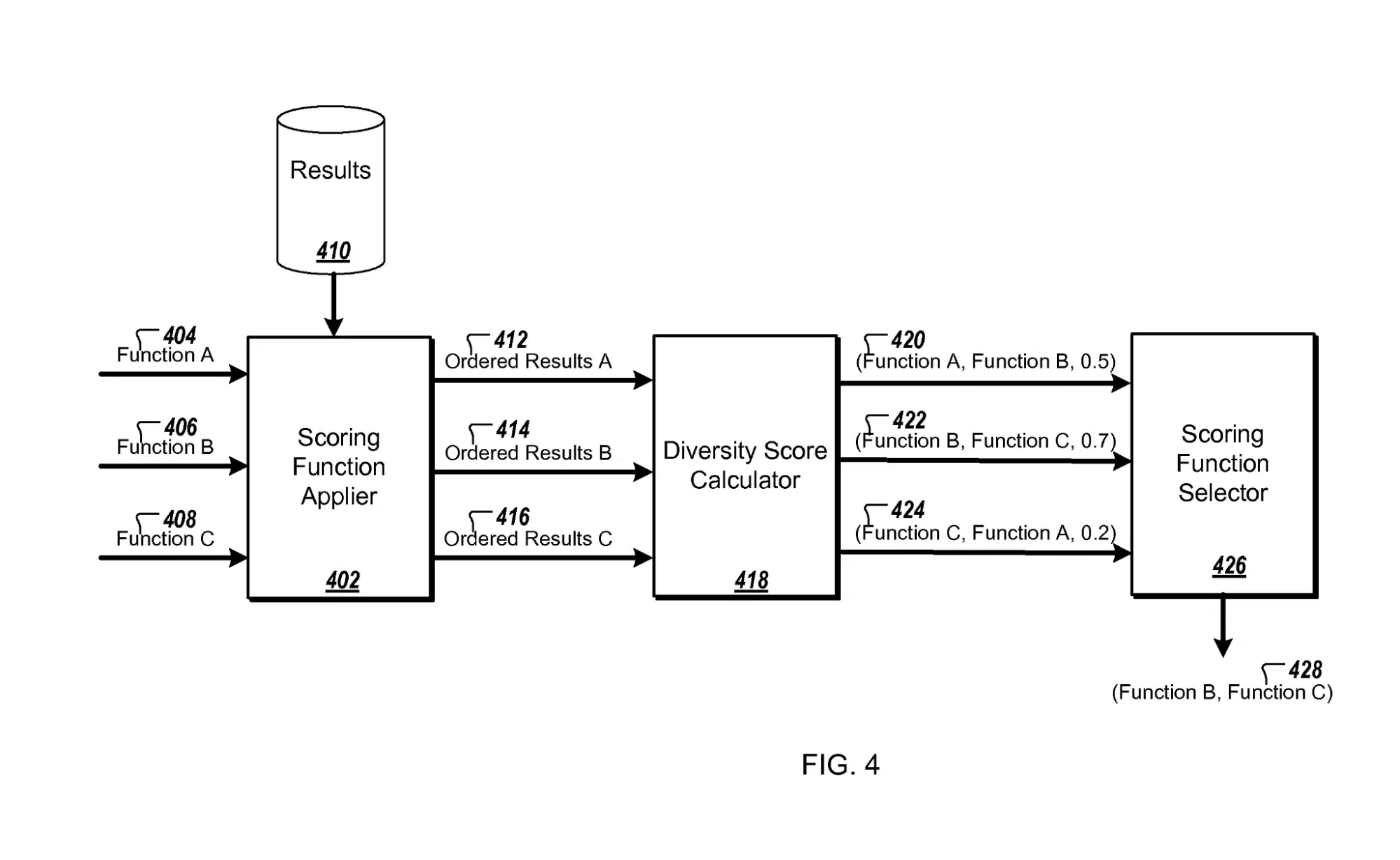

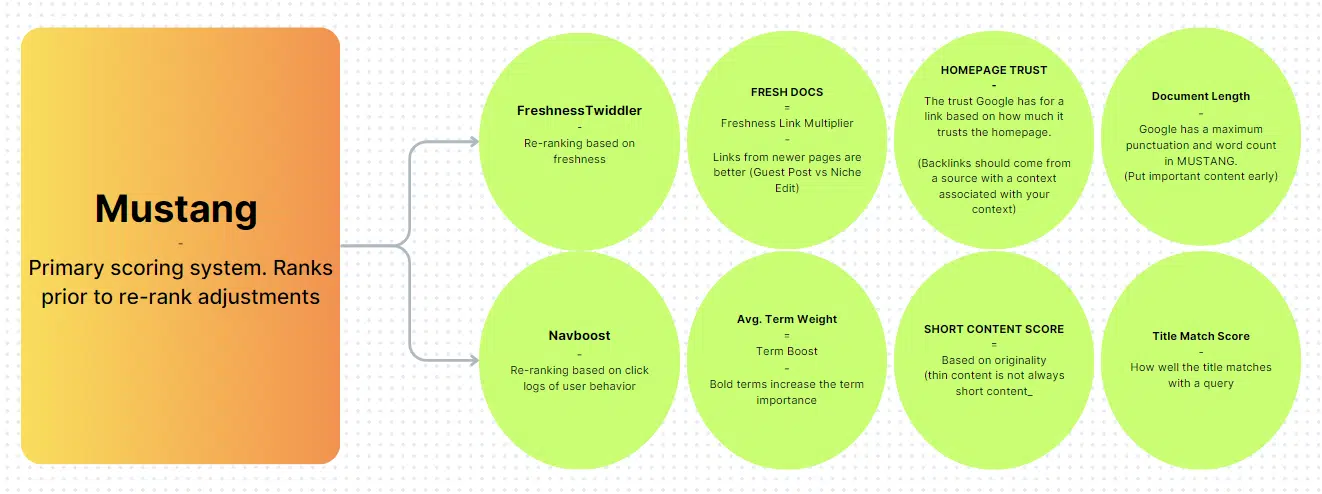

- Use of Ascorers and Twiddlers to adjust rankings ‘on the fly’.

- Sites with >50% videos are treated differently.

What to Test

This leak underscores the importance of using a test-and-learn approach to SEO, particularly for large sites and enterprise SEO.

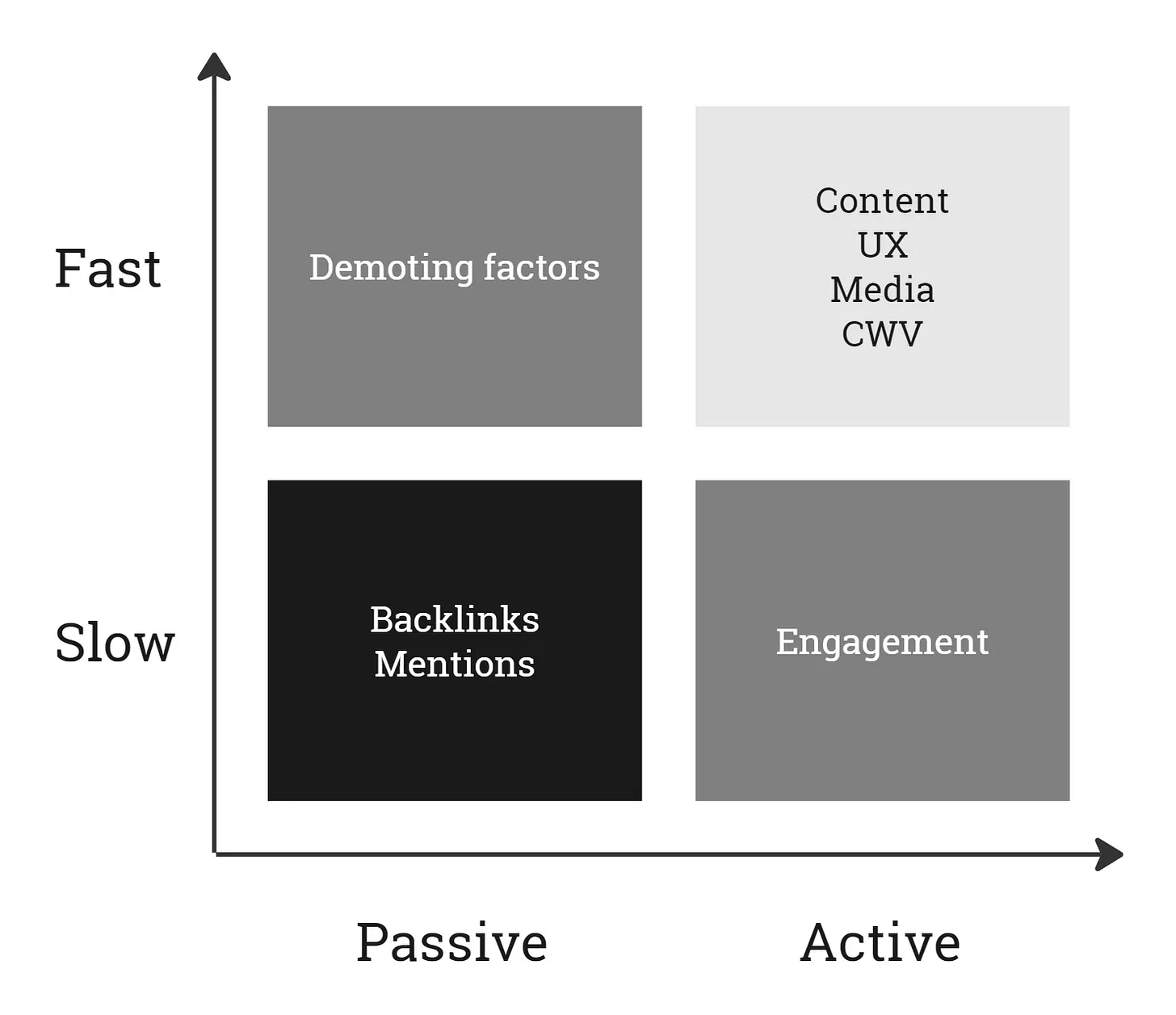

The leaked document, spanning over 2,500 pages, suggests four distinct groups of tests we can conduct.

Credit: Kevin Indig Growth memo

Content, user experience, media, and core web vitals will likely yield rapid results, albeit requiring some effort. Identifying and eliminating potential demoting factors can also enhance performance at a site level or specific sections, like templates.

Authority signals such as backlinks and mentions are present but are costly and challenging to test on a large scale. Nonetheless, sound digital PR, branding, and authority fundamentals can boost overall marketing performance, even under non-ideal test conditions.

Some ranking factors, like domain authority, are site-wide, while others are page-specific, such as click-through rate.

UX & Engagement

Common areas to test and validate include navigation, time on site, engagement, task completion, font size, image quality, and ease of use.

Engagement in search results, like CTR, is crucial to ensure content matches user intent, particularly titles and descriptions.

Note: Regional variations in SERPs (EU vs AU vs US) exhibit different behaviours and patterns. For example, a 3% CTR might be acceptable in the US but could be better in EU markets.

Improving internal calls to action and user engagement supports the challenging yet vital goal of user task completion.

When evaluating engagement, the search type and the composition of search results should also be considered. For example, does Google limit the number of specific types of websites, such as e-commerce sites, blogs, videos, and images?

Content

The document reaffirms the importance of content freshness and quality as ranking factors.

Embeddings for content, topics, and authorship are highlighted as ranking factors. The frequency of content changes and the date of content publication and refresh are important.

Authority Signals

The document highlights authority signals such as brand mentions, domain authority, and links from new and trusted pages.

A key revelation from the leak is the importance of domain authority and homepage trust or rank, especially for new pages.

Demoting Factors

Parallel strategies can be used to remove demoting signals. Improving content quality can enhance user engagement. Testing and improving poor navigation, poor user signals, and overused anchor text are recommended.

Testing Framework

SEO testing should ultimately lead to increased revenue and profit. Most testing signals are SEO metrics that don’t directly correlate to visits, particularly for pages not currently ranking highly. Metrics may include user engagement, page quality, and the ratio of indexed pages to all pages.

Where to Test

Some signals, like domain authority, are passive or require extensive testing time. We recommend using them as guidelines rather than as clear A/B split-test components. Testing can be performed at a country or template level for aspects like content quality, depth, decision levels, and freshness.

We also suggest conducting tests for specific keywords rather than all keywords in a given page type.

SEO Strategy Evolution

SEO strategy is now more than chasing the algorithm and includes responsibilities better matched with inbound marketing work.

Experimentation in SEO

Given the variable ranking systems, SEO practices must be based on testing and experimentation. Test SERP, content structure, and metadata positioning to improve click metrics.

Integration of SEO and UX

NavBoost shows Google highly values clicks. Multiple types of user behaviour can signify success, such as a single click with no follow-up search or extended page visitation. Enhancing UX can increase successful sessions, so structure content effectively to keep users engaged.

Understanding Click Metrics

Google’s ranking systems use Search Analytics data as diagnostic features. Poor click rates, despite high impressions, may signal issues. Falling below performance expectations based on position could result in ranking loss.
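As a rough illustration of this check, the sketch below flags pages whose CTR falls well below a benchmark for their average position. The `EXPECTED_CTR` values, the `tolerance` factor, and the row format are assumptions made for the example. They are not figures from the leak, and you would replace them with your own Search Console data.

```python
# Hypothetical benchmark: expected CTR by average position (replace with your own data).
EXPECTED_CTR = {1: 0.28, 2: 0.15, 3: 0.10, 4: 0.07, 5: 0.05}


def flag_underperformers(rows, min_impressions=1000, tolerance=0.5):
    """Return pages whose CTR is below tolerance * expected CTR for their position."""
    flagged = []
    for row in rows:
        position = round(row["position"])
        expected = EXPECTED_CTR.get(position)
        # Skip positions without a benchmark and pages with too little data.
        if expected is None or row["impressions"] < min_impressions:
            continue
        ctr = row["clicks"] / row["impressions"]
        if ctr < expected * tolerance:
            flagged.append((row["page"], round(ctr, 4), expected))
    return flagged


rows = [
    {"page": "/a", "position": 1.2, "clicks": 50, "impressions": 2000},
    {"page": "/b", "position": 3.0, "clicks": 300, "impressions": 2500},
    {"page": "/c", "position": 2.4, "clicks": 5, "impressions": 200},
]
print(flag_underperformers(rows))  # [('/a', 0.025, 0.28)]
```

Pages flagged this way are candidates for title and description tests before any ranking loss shows up.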

Focused Content

Google utilises vector embeddings to assess how content fits with your overall topic range. To succeed in upper funnel content, structured expansion or proven author expertise is needed.

Post-Exit Analysis

Google employs Chrome data as part of the search experience. Analysing post-visit user behaviour could provide insights on how to keep users on your site longer.

SERP Format-based Keyword and Content Strategy

Google might limit SERP rankings for certain content types. As part of keyword research, check SERPs and avoid aligning formats with keywords if ranking is unlikely.

Tactical SEO Changes

Unrestricted Page Titles

The 60-70 character limit is a myth. Adding more keyword-driven elements to the title can attract more clicks.

Fewer Authors, More Content

Work with fewer, but more focused and experienced authors.

Link Relevance Over Volume

Links from pages prioritised higher in the index and relevant links are more valuable.

Prioritise Originality Over Length

Originality can improve performance. Not all queries require extensive content, so focus on originality and update your content as competitors copy it.

Date Consistency

Ensure dates in schema, on-page, and in the XML sitemap are consistent.

Cautious Use of Old Domains

Old domains require careful content updating to phase out Google’s long-term memory of the previous content.

High-Quality Documents

Quality raters help train Google’s classifiers, so create high-quality content to influence future core updates.

Action items

For all these action items, we recommend applying a test-and-learn approach. The speed at which you can roll out and measure an experiment will determine your SEO success.

User Experience

- Focus on driving more qualified traffic to a better user experience measured by CTR, Time on site, Bounce rate.

- Aim to improve the completion rate of tasks by users on your site. This could relate to filling out a form, making a purchase, or any other key action you want users to take.

Keyword Targeting & Selection

- Drive more successful clicks using a broader set of queries.

- Evaluate your keyword strategy. Ensure that you’re targeting a diverse set of queries and that your chosen keywords align with user intent.

- Test for specific types of keywords rather than all keywords in a given page type.

Content

- Ensure content usefulness (move to the next action) by tracking bounce rate or scroll rate and objective completion.

- Consider the user’s intent when creating and optimising content. Make sure your title and description accurately reflect the content of the page.

- Be mindful of the diversity limits of content formats when selecting keywords to target.

- Keep the most important items at the top of large content pieces.

- Optimise headings around queries with short sharp paragraphs answering those queries clearly.

Topical Authority

Clicks and impressions are aggregated and applied on a topical basis.

- Write more content that can earn more impressions and clicks.

- Ensure full or extensive coverage of a given topic. Consider using hub and spoke model.

- Consider removing topically non-relevant pages.

Freshness

Irregularly updated content has the lowest storage priority.

- Create a plan to regularly update your content, ensuring its freshness and relevance.

- Seek ways to update the content by adding unique info, new images, and video content.

- Use this as an opportunity to improve the “effort calculations” metric.

Links

External Links & Brand Mentions

- Earn more link diversity to continue to rank.

- Look for links likely to drive traffic (click and user data).

- Include brand mentions as part of your Digital PR.

- Boost brand recall.

- Likely to be used and referenced in AI-powered searches.

- And improved rankings.

Internal Links

- Aim for links from trusted and high-ranking pages.

- Build links likely to help create a topical bridge.

- Test: link freshness. Consider link module with a refresh rate on top pages.

Technical SEO

- Remove demoting signals like poor navigation, poor user signal, and overused anchor text.

- Ensure the knowledge graph is set up and complete for each key entity in your business.

- Ensure structured data and schema markup are set up.

- Keep timestamps consistent across the XML sitemap, schema markup, publish date, content, and URL.

- Ratings: run online reputation management campaigns to improve ratings of product.

- Consider removing from inventory poor performing products.

Conclusion

Reconsider SEO best practices based on these insights. Keep what works, discard what doesn’t.

Work with management to transition SEO responsibilities, resources and KPIs towards inbound marketing ones.

Let’s Deep Dive into Specifics on…

User Data

User data is a signal.

Previously denied by Google reps, the leak and testimonials by Google executives confirm that user data (clicks, Chrome data) are part of the traffic set.

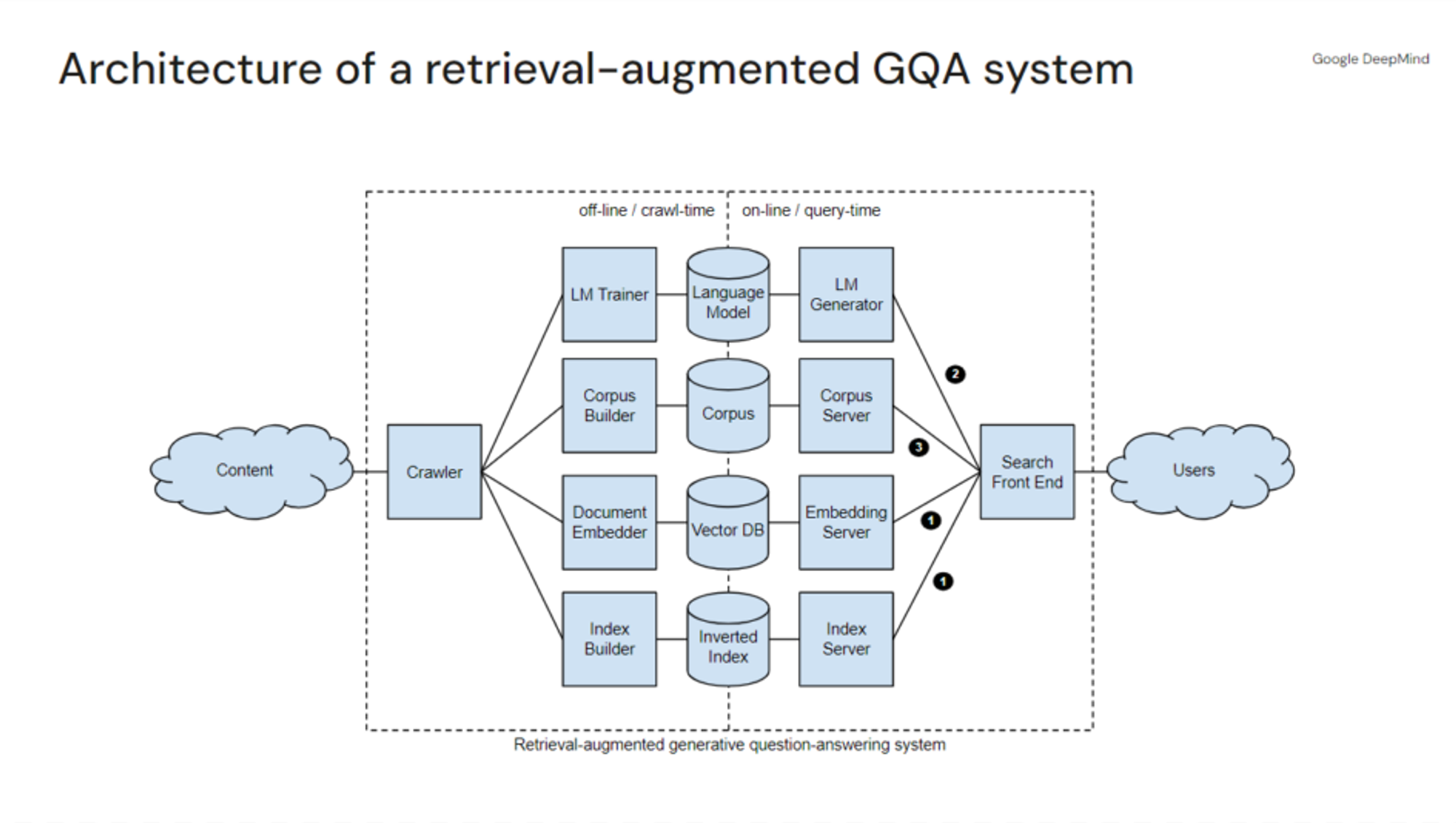

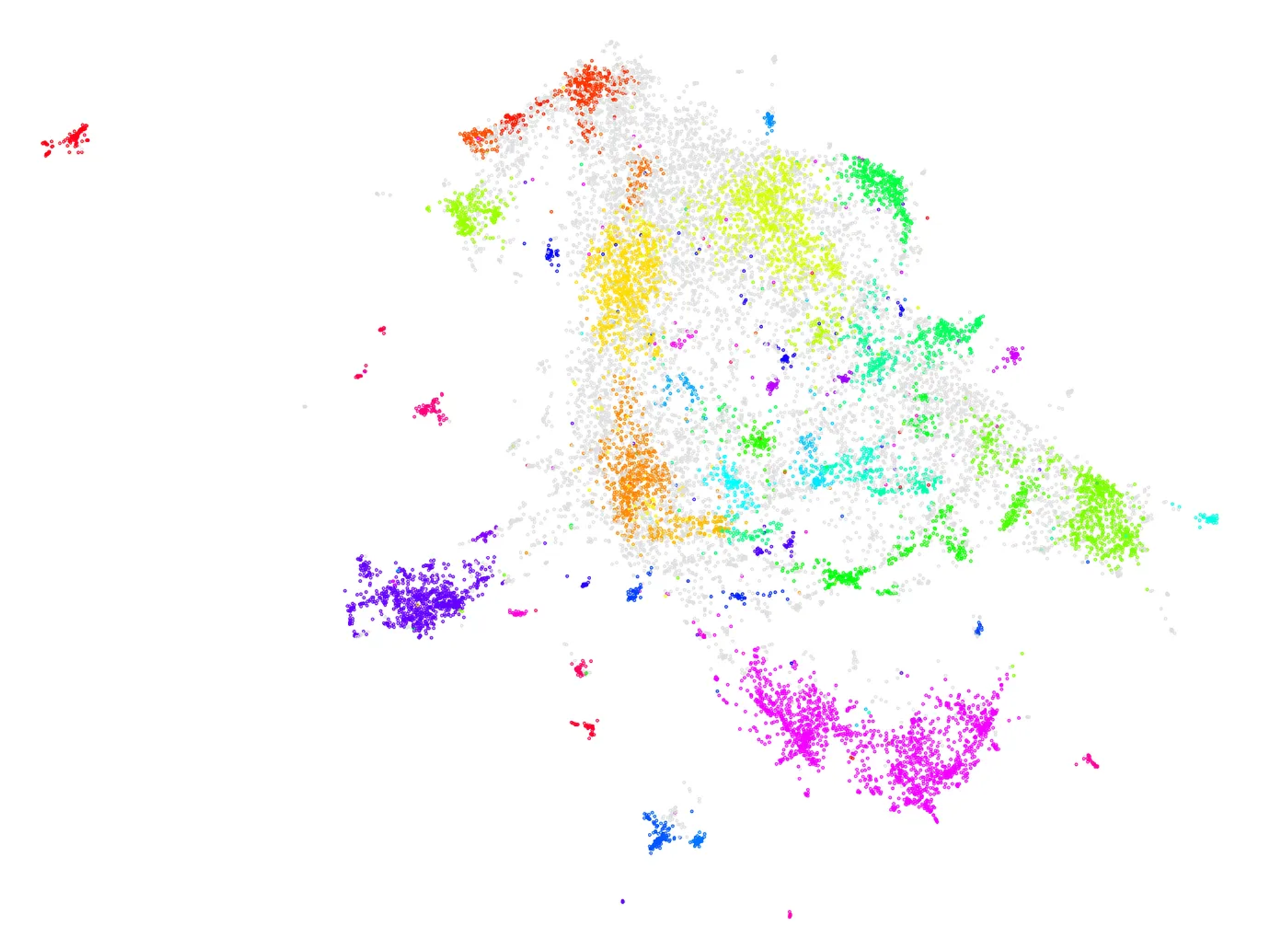

We may consider the Google algorithm as one giant equation with a series of weighted ranking factors. In reality, it’s a series of microservices where many features are preprocessed and made available at runtime to compose the SERP.

Based on the different systems referenced in the documentation, there may be over a hundred different ranking systems.

Twiddlers

Twiddlers are used for on-the-spot ranking.

Twiddlers are scoring factors on top of the main algorithm to boost or demote certain sites or pages.

Some of the boosters identified:

- NavBoost

- QualityBoost

- RealTimeBoost

- WebImageBoost

Twiddlers are re-ranking functions that run after the primary Ascorer search algorithm.
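To make the idea of re-ranking functions concrete, here is a minimal sketch in which each twiddler returns a multiplier applied on top of a base score. Everything in it is an assumption for illustration: the `Result` fields, the multiplier formulas, and the way scores are combined are hypothetical, and the leak does not describe how Google actually implements or weights these functions.

```python
from dataclasses import dataclass


@dataclass
class Result:
    url: str
    base_score: float       # stand-in for the primary (Ascorer) score
    good_click_rate: float  # hypothetical engagement feature
    is_fresh: bool          # hypothetical freshness flag


def nav_boost(result):
    # Hypothetical multiplier: reward results with strong click satisfaction.
    return 1.0 + 0.5 * result.good_click_rate


def real_time_boost(result):
    # Hypothetical multiplier: small lift for fresh documents.
    return 1.2 if result.is_fresh else 1.0


TWIDDLERS = [nav_boost, real_time_boost]


def rerank(results):
    """Apply every twiddler multiplier to the base score, then sort descending."""
    def final_score(r):
        score = r.base_score
        for twiddler in TWIDDLERS:
            score *= twiddler(r)
        return score

    return sorted(results, key=final_score, reverse=True)


results = [
    Result("a.example", 0.80, 0.1, False),
    Result("b.example", 0.70, 0.6, True),
]
print([r.url for r in rerank(results)])  # ['b.example', 'a.example']
```

The point of the sketch is the ordering: a page with a lower base score can overtake a stronger one once engagement and freshness adjustments are applied.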

NavBoost

NavBoost is used at the host level to evaluate a site’s overall quality. This evaluation can result in a boost or a demotion.

It is mostly made of click data, such as the longest click from the SERPs (equivalent to dwell time) and the last good click (the last time someone went to your site and stuck around).

NavBoost tracks clicks over 13 months. Thus, any change would need to be sustained over a long period.

Content Factors

Topic Authority, Keyword Clustering and Relevancy via Embeddings

Google employs page and site embeddings, site focus, and site radius in its scoring mechanism. Page and site embeddings assist Google in discerning the context and relevance of the content. Site focus gauges how intensely a website is dedicated to a specific topic, while site radius assesses the extent to which the content of individual pages deviates from the overall theme of the site.

These metrics, coupled with various uses of click data (good clicks, bad clicks, long clicks, and site-wide impressions), are pivotal in determining search rankings.

By using these factors, Google is able to rank pages accurately based on their relevance, quality, and user engagement.

- siteFocusScore denotes how much a site is focused on a specific topic.

- siteRadius measures how far page embeddings deviate from the site embedding. In plain speech, Google creates a topical identity for your website; every page is measured against that identity.

- siteEmbeddings are compressed site/page embeddings.

The concept behind these three elements is that a site receives a particular score based on its core topic, and each new page and its keywords are evaluated against it.

In the ranking signals analysed, it appears that the embedding also exists at a site-wide level, which means that the topics of any new page are judged by their distance from the site’s established set of keywords and topics.

For example, a site predominantly talking about flights and known for flights may struggle to rank for hotel keywords, as the volume of authority signals and content known to Google is far smaller for hotels than for flight-related queries.
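The flights-versus-hotels example can be sketched with toy vectors. The code below assumes the site embedding is the mean of the page embeddings and that deviation is measured with cosine distance. Both are assumptions chosen for illustration, since the leak names the attributes but does not give the formulas. The three-dimensional vectors are made up, and real embeddings would come from a language model.

```python
import math


def mean_vector(vectors):
    """Element-wise average of equally sized vectors."""
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def cosine_distance(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


# Made-up page embeddings: two flight pages and one hotel page.
page_embeddings = {
    "/flights/sydney": [0.9, 0.1, 0.0],
    "/flights/london": [0.8, 0.2, 0.0],
    "/hotels/paris": [0.1, 0.9, 0.1],
}

# Assumption: the site's topical identity is the average of its pages.
site_embedding = mean_vector(list(page_embeddings.values()))
distances = {
    url: cosine_distance(vec, site_embedding)
    for url, vec in page_embeddings.items()
}
site_radius = sum(distances.values()) / len(distances)  # average deviation
outlier = max(distances, key=distances.get)

print(outlier)  # /hotels/paris
```

Run against your own page embeddings, the same calculation gives a quick list of pages that sit furthest from the site’s core topic.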

Creating a hub and spoke model around new topics, new patients, or key pages will be required to drive additional relevancy and add the embedding of a new set of keywords associated with the site.

Keyword stuffing score

Conversely, the algorithm includes a keyword stuffing score. Ensuring semantic relevancy will benefit a page’s embeddings and reduce the risk of a high keyword stuffing score.

Quality Rating Based on the Estimated Effort to Replicate Content

PageQuality (PQ) is where Google employs a large language model (LLM) to gauge the “effort” invested in creating article pages.

Factors contributing to higher effort scores include the use of tools, images, videos, unique data, and the depth of information offered, all of which are known to increase user satisfaction.

The emphasis on LLM-based effort appraisal underscores Google’s commitment to recognising and rewarding high-quality, original content. Pages that exhibit considerable effort in content creation and presentation stand a better chance of achieving higher rankings.

For instance, even though this article is content-rich, it primarily summarises findings from another source, which would result in a lower Page Quality rating.

Content Quality and Answer to User Query

Terms related to a query should only be included if the page is likely to rank well for such terms and matches user intent. The algorithm also considers content data and unique information.

Content Effort Score May be for Generative AI Content

Google is attempting to determine the amount of effort employed when creating content. Based on the definition, we don’t know if all content is scored this way by an LLM or is just content they suspect is built using generative AI.

Originality Score for Low Content Pages

OriginalContentScore is used to evaluate the originality of a content piece, especially for short content.

This could allow long-tail pages to have unique content that others do not display.

Most product pages, categories, subcategories, or localised pages usually have limited content.

Being able to extract data from local entities will ensure that there is not a replica of all the pages on the web and can yield strong results for some of the most valuable pages.

Dates (i.e. Freshness) are Very Important

This highlights the importance of having data embedded into the content produced. Content freshness is (very) important. But good quality content is rarely fresh.

One option to bring freshness to your page is by embedding content segments based on available data (i.e. programmatic content connected to a database). Another option is regularly refreshed content generated with retrieval-augmented generation (RAG), including expert tips and advice with clear authorship. This can be tested to evaluate the impact on rankings.

Our experience working with large dynamic sites suggests that even the smallest amount of live data becoming stale can significantly impact ranking.

Example: one of our templates had live pricing data. This live pricing data module broke, and after approximately a week, a whole template of ranking high-value popular pages quickly dropped in performance.

It suggests that having information from either external data sets or internal information databases can help create fresh content likely to drive positive experience for users and be rewarded by Google.

Date consistency is important.

Date consistency across the lastmod date in XML sitemaps, the URL, the content, and the publish date is key.

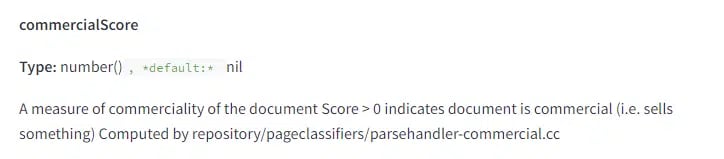
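A date-consistency audit along these lines can be sketched as follows. The field names (`sitemap_lastmod`, `schema_date_modified`, `on_page`) and the one-day tolerance are assumptions for the example. In practice the values would come from your own crawl and sitemap exports.

```python
from datetime import date


def inconsistent_dates(pages, max_gap_days=1):
    """Return {url: gap_in_days} for pages whose known dates disagree."""
    issues = {}
    for url, dates in pages.items():
        known = {k: v for k, v in dates.items() if v is not None}
        if len(known) < 2:
            continue  # nothing to compare
        gap = (max(known.values()) - min(known.values())).days
        if gap > max_gap_days:
            issues[url] = gap
    return issues


pages = {
    "/guide": {
        "sitemap_lastmod": date(2024, 6, 1),
        "schema_date_modified": date(2024, 6, 1),
        "on_page": date(2024, 6, 1),
    },
    "/pricing": {
        "sitemap_lastmod": date(2024, 6, 10),
        "schema_date_modified": date(2023, 1, 5),
        "on_page": None,  # no visible date found on the page
    },
}
print(list(inconsistent_dates(pages)))  # ['/pricing']
```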

Google Detects How Commercial a Page is

We know that intent is a heavy Search component, but we only have measures of this on the keyword side of the equation.

Google commercialScore can be used to stop a page from being considered for a query with informational intent.

Consolidating informational and transactional page content to improve visibility for both types of terms may work to varying degrees, but it’s interesting to see the score effectively considered a binary based on this description.

Authorship

Authorship signals are included in the ranking factors. Markup and expert author profiles should be tested on different page types, especially for keywords related to “best”, “top”, “reviews”, but also for navigational queries and “how to” information.

Documents Get Truncated

Google truncates documents based on the maximum number of tokens. Ensure the most valuable information and links are placed at the top of the content.
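To check whether key links and copy sit inside a token budget, a rough sketch is shown below. It uses whitespace-separated words as a crude stand-in for tokens, and the budget of 20 is purely illustrative. The leak does not disclose the tokenizer or the actual limit.

```python
def split_at_token_budget(text, max_tokens):
    """Split text into the part kept and the part truncated (whitespace tokens)."""
    tokens = text.split()
    return " ".join(tokens[:max_tokens]), " ".join(tokens[max_tokens:])


def link_survives(text, anchor_text, max_tokens):
    """True if the anchor text appears before the truncation point."""
    kept, _ = split_at_token_budget(text, max_tokens)
    return anchor_text in kept


doc = "Compare credit cards here. " + "filler " * 50 + "See our rewards guide."
print(link_survives(doc, "Compare credit cards", 20))  # True
print(link_survives(doc, "rewards guide", 20))         # False
```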

The Significance of Page Updates is Measured

The significance of a page update impacts how often a page is crawled and indexed. This feature suggests that Google expects more significant updates to the page.

Google Stores Versions of Pages Over Time

Like archive.org, Google stores different versions of a page and can analyse the current version compared to previous content. This factor should be considered for content freshness and redirects.

The volume of content changed is also considered in page version.
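One way to estimate how much of a page changed between two stored versions is a word-level diff. The sketch below uses Python’s standard `difflib.SequenceMatcher`. It is our own approximation for testing purposes and not a description of how Google measures update significance.

```python
import difflib


def change_ratio(old_text, new_text):
    """Share of content that differs between two versions (0.0 = identical)."""
    matcher = difflib.SequenceMatcher(None, old_text.split(), new_text.split())
    return 1.0 - matcher.ratio()


old = "Our card has a 20 percent rate and no annual fee"
minor = "Our card has a 21 percent rate and no annual fee"
major = "Compare five new cards with live rates fees and rewards"

print(round(change_ratio(old, minor), 2))  # small change
print(round(change_ratio(old, major), 2))  # large change
```

Tracking this ratio per template makes it easier to test whether substantial rewrites behave differently from small edits.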

Video Sites are Treated Differently

Google has a boolean evaluation of a site on whether more than 50% of the URLs have videos. Sites with more than 50% of video pages will be considered differently.
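To see which side of that threshold a site falls on, you can compute the share of URLs with video from a crawl export. The sketch below assumes a simple list of pages with a `has_video` flag. The field name is hypothetical, and how Google detects video on a page is not described in the leak.

```python
def video_share(pages):
    """Fraction of pages that contain a video."""
    if not pages:
        return 0.0
    return sum(1 for p in pages if p["has_video"]) / len(pages)


def is_video_focused(pages, threshold=0.5):
    """True when more than `threshold` of the pages contain a video."""
    return video_share(pages) > threshold


crawl = [
    {"url": "/a", "has_video": True},
    {"url": "/b", "has_video": True},
    {"url": "/c", "has_video": False},
    {"url": "/d", "has_video": False},
]
print(video_share(crawl), is_video_focused(crawl))  # 0.5 False
```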

With the rise of AI Overviews, a lot of businesses are considering using videos as a medium to still be visible and also increase the overall quality of their pages.

Other Factors

The Specific Score for YMYL

Google has a specific classifier for Your Money or Your Life (YMYL) information, such as health and finance, and for news.

Quality Travel Site Module

The Travel Module is a specific ranking factor that determines whether a travel-related site is a hotel, attraction, or aggregator.

The score has a specific section for aggregators. We assume it distinguishes official service providers (usually small businesses) from aggregators and gives the former a boost.

Links

Indexing Tier Impacts Link Value

Links from High-Value Pages

There appears to be a loose relationship between a page’s indexing location and its value. If a page receives links from a high-ranking, freshly indexed, high-value page, this link is more likely to add more value.

This applies to both internal and external links: a link from a fresh page carries more weight than one from a stale page. This matches the setup of the current model in our proprietary ScalePath.ai algorithm.

Pages that are considered fresh are also considered high quality.

New pages use Homepage PageRank and Domain Authority

This and siteAuthority are likely used as proxies for new pages until they have their own PageRank calculated.

Link Spam Velocity Signals

Google can measure the speed at which link spamming occurs. This is likely used to nullify negative SEO attacks.
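For your own monitoring, a spike in new referring links can be detected with a simple rolling baseline. The sketch below is an assumption-laden illustration, not Google’s method: the eight-week baseline, the z-score threshold, and the weekly counts are all made up.

```python
from statistics import mean, pstdev


def velocity_spikes(weekly_new_links, z_threshold=3.0, baseline_weeks=8):
    """Return indexes of weeks whose new-link count spikes above the baseline."""
    spikes = []
    for i in range(baseline_weeks, len(weekly_new_links)):
        baseline = weekly_new_links[i - baseline_weeks:i]
        mu, sigma = mean(baseline), pstdev(baseline)
        if sigma == 0:
            sigma = 1.0  # avoid division by zero on a flat baseline
        if (weekly_new_links[i] - mu) / sigma > z_threshold:
            spikes.append(i)
    return spikes


weekly = [10, 12, 9, 11, 10, 13, 11, 10, 12, 240, 11]
print(velocity_spikes(weekly))  # [9]
```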

Font Size of Terms and Links Matters

Font size for terms and anchor text is still a signal. This could be a way to discount footnote-style links and instead reward content and anchors/links that are high on the page and highly visible.

This presents a problem when trying to increase the number of links on each page.

🧪 Test idea: Obfuscate or remove links that are typically hidden or not very visible on the page to see if target pages are affected. If not, it means that those internal links may not drive sufficient authority.

💡 Creating link modules at the top of the page for the most valuable pages that show the next best action based on user data (i.e., internal searches, next page navigation) can improve user experience and rankings.

Composition of the Search Results

Google can specify a limit of results per content type.

In other words, they can specify only X number of blog posts or Y number of news articles appearing for a given SERP.

Understanding these diversity limits could help us decide which content formats to create when targeting keywords.

For instance, if we know that the limit for blog posts is three and we don’t think we can outrank any of them, then maybe a video is a more viable format for that keyword.
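The effect of such limits can be sketched as a filter applied to an already ranked list. The content types, the cap of three blog posts, and the function itself are hypothetical and only mirror the example above. The leak does not reveal actual limits.

```python
def apply_diversity_limits(ranked_results, limits):
    """Walk a ranked list and skip results whose content type has hit its cap."""
    counts = {}
    page = []
    for url, content_type in ranked_results:
        cap = limits.get(content_type)  # None means no limit for this type
        used = counts.get(content_type, 0)
        if cap is not None and used >= cap:
            continue
        counts[content_type] = used + 1
        page.append(url)
    return page


ranked = [
    ("blog-1", "blog"),
    ("blog-2", "blog"),
    ("blog-3", "blog"),
    ("video-1", "video"),
    ("blog-4", "blog"),
    ("news-1", "news"),
]
print(apply_diversity_limits(ranked, {"blog": 3}))
# ['blog-1', 'blog-2', 'blog-3', 'video-1', 'news-1']
```

In this toy SERP the fourth blog post never appears, however good it is, while the video takes a slot with no competition from its own format.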

Demotions

- Product Review Demotion: This is significant for eCommerce sites and aggregators. It implies that if a site is giving higher reviews for underperforming products, or lower reviews for high-performing products, it may need to adjust its strategy. It’s also worth considering whether to include products with low ratings in the inventory or mark them as “no index” so they are not shown to Google.

- Location Demotion: Global and super global pages may be demoted. Details are scarce, but it may suggest that Google prefers local results over global ones. Thus, a country-level domain or a localised page with authentic local content may generate better search results.

Demotions associated with dubious tactics and low-quality sites are:

- Anchor Mismatch: If a link does not align with the target site it’s linked to, the link is demoted in the calculations. Google seeks relevance on both sides of a link.

- SERP Demotion: This is a signal for demotion based on factors observed from the SERP, indicating potential user dissatisfaction with the page, likely measured by clicks.

- Navigation Demotion: This is likely a demotion applied to pages showing poor navigation practices or user experience problems.

- Exact Match Domain Demotion: In late 2012, Matt Cutts announced that exact match domains would not be as valuable as they used to be. There is a specific feature for their demotion.

- Porn Demotion

Other link demotions:

Link Spam Velocity

Several metrics are related to this. These metrics may be important for guarding against negative SEO. If a page gets a large number of spam links quickly, Google may discount the links pointing to that page to counter a negative SEO attack.

Domain Expiry

Google monitors when domains expire to detect and penalise expired domain abuse.

Various demotions have been identified, mostly related to deceptive SEO tactics. However, product review and location demotions are also notable.

Conclusion

Remember, this algo leak should not change the way we do SEO. Best-practice SEO remains user-focused. However, the leak does give us additional insight into Google’s algorithm and into what we can test.